Data pipelining and ETL are central to enterprise data management. Over the last few years, many characteristics of ETL pipelines have changed significantly. Many enterprises are continually improving their data management processes with machine learning (ML) and modern data pipelines. At the same time, the amount of accessible data keeps growing year after year. Inside a pipeline, data goes through validation, transformation, normalization, and other processes, while the pipeline itself moves the data from one place to another.

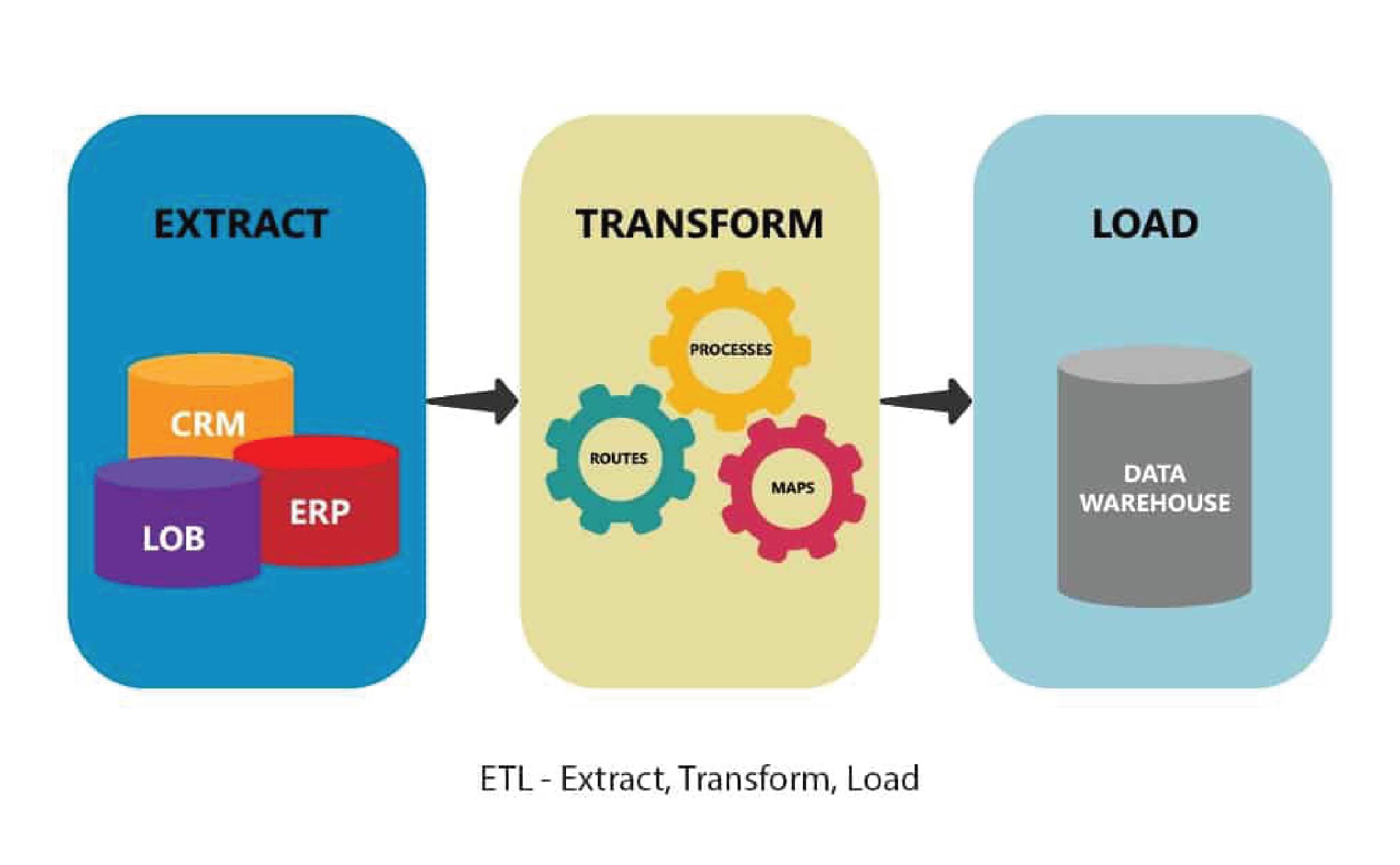

ETL stands for extract, transform, and load. An ETL pipeline extracts data from different sources, transforms it, and then loads it into a target such as a data mart, data warehouse, or database for analysis or other purposes. During extraction, the system ingests data from sources such as applications, business systems, sensors, and databases. In the next stage, the raw data is transformed into the form the end application requires. Finally, the transformed data is loaded into a target database or warehouse. It may also be published through an API or shared with stakeholders. The aim of building an ETL pipeline is to prepare correct data for reporting and store it for fast, easy access and analysis.
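To make the three stages concrete, here is a minimal Python sketch of an ETL job, assuming a small CSV export as the source and an in-memory SQLite database standing in for the warehouse. The sample data, the `extract`/`transform`/`load` function names, and the `orders` table are hypothetical illustrations, not part of any specific product.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (e.g. an order application).
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,7.25
3,carol,
"""

def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and normalize names, cast types, drop rows missing an amount."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().title(), float(row["amount"]))
        )
    return cleaned

def load(records, conn):
    """Load: write the transformed records into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall())
# [('Alice', 10.5), ('Bob', 7.25)]
```

Each stage stays a separate function, so a real source (an API, a sensor feed) or a real target (a warehouse) could replace the stand-ins without touching the other stages.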

ETL pipelines are used in several data processes, including:

Data integration

Data warehousing

Data transformation

Data quality management

Businesses can use ETL pipelines in many ways. They help you make higher-quality decisions and deliver results faster. With ETL pipelines, you can centralize all your data sources, giving the company an integrated view of its data assets. For example, a CRM department may use an ETL pipeline to collect customer data from the various touchpoints along the customer journey. The department can then build dashboards with detailed information, and the pipeline acts as a single source for all customer information across platforms. In addition, data often has to be moved and transformed internally between multiple data stores. Without such pipelines, businesses struggle to analyze and make sense of data spread across different information systems.
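The idea of a single customer view can be sketched as merging records from several touchpoints on a shared customer key. This is a minimal Python illustration, assuming every source exposes a `customer_id` field; the source lists and field names are hypothetical.

```python
from collections import defaultdict

# Hypothetical records from three touchpoints, each keyed by customer_id.
web_visits = [{"customer_id": "c1", "pages_viewed": 5}, {"customer_id": "c2", "pages_viewed": 2}]
support_tickets = [{"customer_id": "c1", "open_tickets": 1}]
purchases = [{"customer_id": "c2", "total_spent": 120.0}, {"customer_id": "c1", "total_spent": 40.0}]

def build_customer_view(*sources):
    """Merge records from every source into one profile per customer."""
    profiles = defaultdict(dict)
    for source in sources:
        for record in source:
            # Copy every field except the join key into that customer's profile.
            fields = {k: v for k, v in record.items() if k != "customer_id"}
            profiles[record["customer_id"]].update(fields)
    return dict(profiles)

view = build_customer_view(web_visits, support_tickets, purchases)
print(view["c1"])  # {'pages_viewed': 5, 'open_tickets': 1, 'total_spent': 40.0}
```

In this sketch, a field that appears in more than one source is overwritten by the later source; a production pipeline would need an explicit rule for resolving such conflicts.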

Historical context means that companies can review their evolution through their data. Data repositories hold recent data from newly implemented systems as well as older data from legacy systems. Combining new and old data lets companies compare past and present figures, which helps them better understand factors such as customer requirements and market trends and make informed decisions about marketing and production.
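Combining old and new systems usually means mapping legacy records onto the current schema before comparing them. The minimal Python sketch below assumes a hypothetical legacy system that stores yearly revenue in cents under different field names; all names and figures are illustrative.

```python
# Hypothetical schemas: the legacy system stores yearly revenue under different
# field names (and in cents) than the newly implemented system.
legacy_rows = [{"yr": 2021, "rev_cents": 1_000_000}, {"yr": 2022, "rev_cents": 1_200_000}]
new_rows = [{"year": 2023, "revenue": 15_000.0}]

def normalize_legacy(row):
    """Map a legacy record onto the new system's schema."""
    return {"year": row["yr"], "revenue": row["rev_cents"] / 100}

def combined_history(legacy, new):
    """Combine old and new records into one year-ordered series."""
    rows = [normalize_legacy(r) for r in legacy] + list(new)
    return sorted(rows, key=lambda r: r["year"])

def year_over_year(history):
    """Percentage change in revenue from each year to the next."""
    return {
        cur["year"]: round((cur["revenue"] - prev["revenue"]) / prev["revenue"] * 100, 1)
        for prev, cur in zip(history, history[1:])
    }

history = combined_history(legacy_rows, new_rows)
print(year_over_year(history))  # {2022: 20.0, 2023: 25.0}
```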

A unified view means that all of a company’s data sets are available in one repository, even when the data comes from various sources and in different types. Consolidation makes the data easy to visualize, since anyone can review it in one place, and it makes the data easier to analyze and understand. It also speeds up work by removing the delays involved in locating information across several databases.

Specialized ETL software improves productivity because it lets users automate repeatable processes. Its goal is to move data into repositories with less time and less code than manual approaches, and it requires few technical skills. That frees you to concentrate on more important, profitable tasks for the organization.

A data pipeline is the series of steps involved in moving data from a source system to a target system. These steps include copying and transferring data from an external location into repositories, where it is combined with data from other sources. The main objective of a data pipeline is to ensure that every step is applied consistently to all data. When the pipeline tooling is managed correctly, the pipeline gives your company access to consistent, structured datasets for analysis. The data engineer’s task is to manage information from various sources and use it to systematize data transfer and transformation. AWS Data Pipeline, for example, lets users move data between different AWS storage services and on-premises data sources.
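The notion of a pipeline as an ordered set of steps applied uniformly to every record can be sketched in a few lines of Python. The step functions, the `REGIONS` lookup table, and the sample records are hypothetical; they stand in for copying data from an external source, combining it with another source, and marking it ready to load.

```python
from typing import Callable, Iterable, List

Step = Callable[[dict], dict]

def run_pipeline(records: Iterable[dict], steps: List[Step]) -> List[dict]:
    """Apply each step, in order, to every record so all data is treated the same way."""
    output = []
    for record in records:
        for step in steps:
            record = step(record)
        output.append(record)
    return output

# Hypothetical reference data from a second source.
REGIONS = {"US": "North America", "DE": "Europe"}

def copy_record(r):
    return dict(r)  # copy so the source data is never mutated

def add_region(r):
    r["region"] = REGIONS.get(r["country"], "Unknown")  # combine with the lookup source
    return r

def mark_loaded(r):
    r["status"] = "loaded"
    return r

source = [{"id": 1, "country": "US"}, {"id": 2, "country": "FR"}]
result = run_pipeline(source, [copy_record, add_region, mark_loaded])
print(result)
```

Because `run_pipeline` applies the same list of steps to every record, adding or reordering a step changes the treatment of all data at once, which is the consistency the paragraph describes.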

A data pipeline helps you extract insights from data properly. This is essential for organizations that depend on siloed data sources, need real-time analysis, or store their data in the cloud. For instance, data pipelines can feed predictive analysis that reveals future trends. Predictive analysis is valuable for a production department: it helps the department estimate when raw materials will run out and forecast which deliveries may be delayed. When you use capable data pipeline tools, they deliver the right insights, and your department’s operational performance can improve significantly.
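As a rough illustration of the raw-material example, the sketch below estimates days until stockout from recent consumption data a pipeline might deliver. It uses a simple average of daily usage, not a full predictive model, and the figures and the `days_until_stockout` function are hypothetical.

```python
from datetime import date, timedelta

def days_until_stockout(stock_on_hand, daily_usage):
    """Estimate days left by dividing current stock by average daily consumption."""
    avg = sum(daily_usage) / len(daily_usage)
    if avg <= 0:
        return None  # no consumption, so no stockout is forecast
    return stock_on_hand / avg

# Hypothetical figures delivered from inventory and production systems.
usage_last_week = [40, 55, 50, 45, 60, 50, 50]  # units consumed per day
stock = 700

days = days_until_stockout(stock, usage_last_week)
print(days)  # 14.0
print(date(2024, 1, 1) + timedelta(days=int(days)))  # 2024-01-15
```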

Businesses today move at high speed, so they need real-time access to data. A data pipeline provides a continuous flow of data, which lets the company react quickly to market changes and make valuable decisions. Since business deals cannot wait, a data pipeline helps you get things done quickly.
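Real-time data flow means processing each event as it arrives rather than waiting for a full batch. This minimal Python sketch, assuming a hypothetical price feed, uses a generator to emit an updated rolling average after every event; a real deployment would read from a message queue or streaming platform instead of a list.

```python
from collections import deque
from typing import Iterable, Iterator

def rolling_average(events: Iterable[float], window: int = 3) -> Iterator[float]:
    """Emit an updated average as each event arrives instead of waiting for a batch."""
    recent = deque(maxlen=window)  # keeps only the last `window` values
    for value in events:
        recent.append(value)
        yield sum(recent) / len(recent)

# Hypothetical stream of prices arriving one at a time.
price_feed = iter([10.0, 12.0, 14.0, 20.0])
for avg in rolling_average(price_feed):
    print(avg)
```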

Insights from analysis are only as accurate as the data in your analytical tools. Data pipelines automate data preparation and delivery to these tools, ensuring that insights are based on the most recent available data. This strengthens your business’s analytical capability and supports accurate forecasting, trend analysis, and strategic planning.

Data quality matters for every task that uses data. A well-designed pipeline regularly cleans the data from its sources before storing it. It can automatically detect and correct errors, which provides both reliability and convenience, so you don’t have to make decisions based on faulty data.
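The automatic cleaning and checking described above can be sketched as a function that corrects fixable problems and sets aside records that still fail validation. This is a minimal Python example; the `email` field, the deliberately simple regular expression, and the duplicate rule are assumptions chosen for illustration.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple check

def clean_and_validate(rows):
    """Fix correctable issues and separate records that still fail validation."""
    valid, rejected = [], []
    seen = set()
    for row in rows:
        email = row.get("email", "").strip().lower()  # correct casing and whitespace
        if not EMAIL_RE.match(email):
            rejected.append((row, "invalid email"))
        elif email in seen:
            rejected.append((row, "duplicate"))
        else:
            seen.add(email)
            valid.append({**row, "email": email})
    return valid, rejected

rows = [
    {"id": 1, "email": " Ann@Example.com "},
    {"id": 2, "email": "not-an-email"},
    {"id": 3, "email": "ann@example.com"},
]
valid, rejected = clean_and_validate(rows)
print(valid)     # [{'id': 1, 'email': 'ann@example.com'}]
print(rejected)
```

Keeping rejected rows along with a reason, rather than silently dropping them, lets someone review and fix the source data later.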

If you want your business to adopt advanced technologies such as AI and machine learning, you must recognize the essential role of the data pipeline. These technologies need well-curated data to work efficiently. A data pipeline supplies the data they need in the proper form, so that you can support complex management systems.
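Putting data in the proper form for machine learning often includes scaling numeric features. The Python sketch below shows min-max scaling, one common choice among several (standardization is another); the `ages` column is hypothetical.

```python
def min_max_scale(values):
    """Rescale numeric values to the 0-1 range, a common preprocessing step for ML models."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: avoid dividing by zero
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical feature column (customer ages) before it is handed to a model.
ages = [20, 30, 40, 60]
print(min_max_scale(ages))  # [0.0, 0.25, 0.5, 1.0]
```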

The focus on cloud data security and compliance keeps growing, so your business must ensure that its data processes meet regulatory standards. Data pipelines support compliance by providing a well-controlled, transparent data flow with clear audit trails and governance controls. This helps you comply with regulations and maintain your stakeholders’ trust.
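An audit trail can be as simple as recording, for every pipeline step, who ran it, when, and how many rows went in and out. This minimal Python sketch keeps the log in a list and masks a phone-number field as an example compliance step; the step names, the `etl-service` user, and the masking rule are hypothetical.

```python
import json
from datetime import datetime, timezone

audit_log = []  # in a real system this would go to durable, access-controlled storage

def audited(step_name, func, records, user="etl-service"):
    """Run one pipeline step and record who ran it, when, and the row counts in and out."""
    result = func(records)
    audit_log.append({
        "step": step_name,
        "user": user,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "rows_in": len(records),
        "rows_out": len(result),
    })
    return result

data = [{"id": 1, "phone": "555-0134"}, {"id": 2, "phone": None}]
data = audited("drop_missing_phone", lambda rs: [r for r in rs if r["phone"]], data)
data = audited("mask_phone", lambda rs: [{**r, "phone": "***-" + r["phone"][-4:]} for r in rs], data)
print(json.dumps(audit_log, indent=2))
```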

As your business grows, its data grows with it. Scalability helps ensure that your data infrastructure does not break under increasing load. Data pipelines are designed to scale and handle growing data volumes without hassle, which supports consistent business expansion. Pipelines can also be extended to new data types and sources, giving you the flexibility a dynamic data environment needs.
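One common way to keep a pipeline stable as volumes grow is to process data in fixed-size batches so memory use stays bounded. This minimal Python sketch assumes a generator as a stand-in for a large source; the batch size and the `amount` field are illustrative. On Python 3.12 and later, the standard library’s `itertools.batched` provides similar batching (yielding tuples).

```python
from itertools import islice
from typing import Iterable, Iterator, List

def batched(records: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield fixed-size batches so memory use stays bounded as data volume grows."""
    it = iter(records)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch

def process_in_batches(records, size=1000):
    """Sum the hypothetical `amount` field one batch at a time."""
    total = 0
    for batch in batched(records, size):
        total += sum(r["amount"] for r in batch)  # stand-in for real per-batch work
    return total

# A generator stands in for a large source that is never fully loaded into memory.
big_source = ({"amount": 1} for _ in range(10_500))
print(process_in_batches(big_source, size=1000))  # 10500
```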

Handling data manually and making decisions from it is resource-intensive. Pipelines reduce this burden by automating data flows, which frees teams to focus on more important tasks. As a result, resources are used more effectively, saving money and making the workforce more efficient.

Data can make the difference between success and failure. With a data pipeline, your business can use its data for competitive advantage, gaining the insights needed to optimize operations, innovate, and deliver better customer experiences.

We provide Data Pipelining & ETL services that help clients transform their data and use it to make their workforce more effective. Their feedback has earned us many national and international awards.

Bliz IT provided us with the best Data Pipelining & ETL services. They have improved how we process all of our data. We have now built a consistent workforce to ensure accurate data, and enhancing performance has become an essential management strategy for us.

Data Manager

The Bliz IT team and their Data Pipelining & ETL services are the reason for our remarkable data accuracy. Their professionals helped us handle massive amounts of data, allowing us to gain in-depth insights and make effective decisions.

Chief Data Officer

After working with Bliz IT, we met many of our requirements. Their exceptional service and personalized strategies allowed us to complete our data processing very smoothly, and the process was more effective and trustworthy. Thanks a lot to Bliz IT!

IT Director

Bliz IT’s expert Data Pipeline & ETL services gave us immense help with our data pipeline process. We now have accurate data to support our operations and are better able to execute and load data reliably. This data ensures timely analytics and reporting.

Analytics Lead

BlizIT is a dynamic and innovative tech company dedicated to providing comprehensive digital solutions for businesses of all sizes. Our goal is to empower businesses with cutting-edge technology, increase online visibility, and drive sustainable growth in the digital space.

© 2024 All Rights Reserved. Powered by Blizit